OpenAI’s popular GPT-3, released last year, showed that language can be used to instruct a large neural network to perform a variety of text generation tasks. Entering the new year, OpenAI is moving from pure text generation to image generation from text: its researchers today announce that they have trained a neural network called DALL·E that creates images from text captions for a wide range of concepts expressible in natural language.

DALL·E is a 12-billion-parameter version of GPT-3 trained to generate images from text descriptions, using a dataset of text–image pairs. The researchers found that DALL·E has a diverse set of capabilities, including creating anthropomorphized versions of animals and objects, combining unrelated concepts in plausible ways, rendering text, and applying transformations to existing images.

A transformer-based language model, DALL·E receives both the text and the image as a single stream of data containing up to 1280 tokens and is trained using maximum likelihood to generate all of the tokens, one after another. The researchers say this training procedure allows DALL·E not only to generate an image from scratch, but also to regenerate any rectangular region of an existing image that extends to the bottom-right corner, in a way that is consistent with the text prompt.
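The single-stream, maximum-likelihood setup can be illustrated with a minimal sketch. It assumes the 1280 tokens split into up to 256 text tokens plus 1024 image tokens (a 32×32 grid), with a text vocabulary of 16384 and an image codebook of 8192, as OpenAI's accompanying blog describes; the padding id and the `next_token_probs` model callback are hypothetical placeholders, not DALL·E's actual code.

```python
import math
from typing import Callable, List, Sequence

TEXT_LEN = 256       # assumed maximum number of text tokens
IMAGE_LEN = 1024     # assumed 32 x 32 grid of image tokens
TEXT_VOCAB = 16384   # assumed text vocabulary size
IMAGE_VOCAB = 8192   # assumed image-codebook size
PAD = 0              # hypothetical padding id inside the text vocabulary


def build_stream(text_tokens: Sequence[int], image_tokens: Sequence[int]) -> List[int]:
    """Concatenate text and image tokens into one stream of TEXT_LEN + IMAGE_LEN ids.

    Image ids are shifted by TEXT_VOCAB so both modalities share one joint vocabulary.
    """
    if len(text_tokens) > TEXT_LEN or len(image_tokens) != IMAGE_LEN:
        raise ValueError("unexpected token counts")
    text = list(text_tokens) + [PAD] * (TEXT_LEN - len(text_tokens))
    image = [TEXT_VOCAB + t for t in image_tokens]
    return text + image


def stream_nll(stream: Sequence[int],
               next_token_probs: Callable[[Sequence[int]], Sequence[float]]) -> float:
    """Negative log-likelihood of the stream under an autoregressive model.

    next_token_probs(prefix) is a hypothetical model call that returns a
    probability for every id in the joint vocabulary. Maximum-likelihood
    training minimizes this sum: each token is predicted from all earlier ones.
    The first token has no prefix here; a real model would predict it from a
    start-of-sequence token, which this sketch omits.
    """
    nll = 0.0
    for i in range(1, len(stream)):
        probs = next_token_probs(stream[:i])
        nll -= math.log(probs[stream[i]])
    return nll
```

With a uniform "model" over the joint vocabulary, the loss is simply 1279 times log(24576), which is a convenient sanity check before plugging in a real network.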

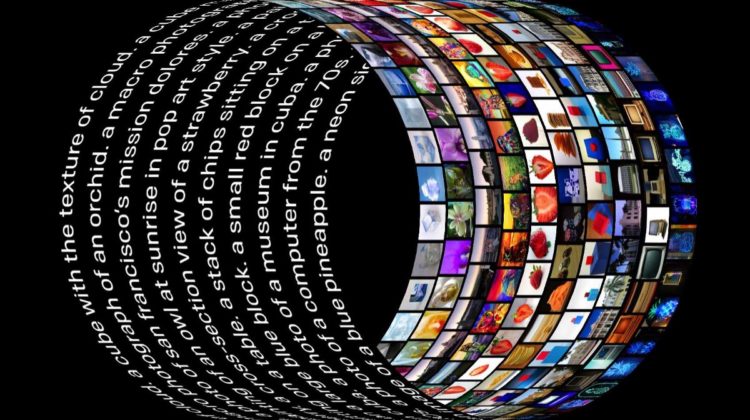
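To make the region-regeneration idea concrete, here is a minimal sketch, assuming the image is a 32×32 grid of discrete tokens read in raster (row-major) order and that `sample_next` is a hypothetical stand-in for the trained model's sampler, already conditioned on the caption. It illustrates one plausible procedure, not OpenAI's implementation: tokens outside the rectangle are copied from the original image, and tokens inside it are resampled one at a time in raster order.

```python
from typing import Callable, List, Sequence, Set

GRID = 32  # assumed 32 x 32 grid of image tokens in raster (row-major) order


def region_positions(r0: int, c0: int, grid: int = GRID) -> Set[int]:
    """Raster indices of the rectangle from (r0, c0) to the bottom-right corner."""
    return {r * grid + c for r in range(r0, grid) for c in range(c0, grid)}


def regenerate(image_tokens: Sequence[int], r0: int, c0: int,
               sample_next: Callable[[List[int]], int],
               grid: int = GRID) -> List[int]:
    """Resample only the tokens inside the region, left to right, top to bottom.

    sample_next(prefix) is a hypothetical sampler that returns the next image
    token given all image tokens produced so far (the caption is assumed to be
    part of its context). Tokens outside the region are kept unchanged.
    """
    region = region_positions(r0, c0, grid)
    out: List[int] = []
    for i, tok in enumerate(image_tokens):
        out.append(sample_next(out) if i in region else tok)
    return out
```

For example, `regenerate(tokens, 16, 16, sampler)` would resample the bottom-right quarter of the grid while leaving the other three quarters untouched.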

OpenAI today also introduces a neural network called CLIP (Contrastive Language–Image Pre-training), which efficiently learns visual concepts from natural language supervision. The researchers say CLIP can be applied to any visual classification benchmark simply by providing the names of the visual categories to be recognized, similar to the “zero-shot” capabilities of GPT-2 and GPT-3.
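Zero-shot classification "by simply providing the names of the visual categories" can be sketched as follows: each category name is turned into a text prompt, both image and prompts are embedded, and the label whose embedding is most similar (by cosine similarity) to the image wins. The prompt template, the temperature of 0.01, and the toy embeddings are assumptions for illustration; in practice the vectors would come from CLIP's image and text encoders, which are not reproduced here.

```python
import math
from typing import Dict, Iterable, Sequence


def build_prompts(labels: Iterable[str], template: str = "a photo of a {}.") -> Dict[str, str]:
    """Turn bare category names into captions (assumed template)."""
    return {label: template.format(label) for label in labels}


def normalize(v: Sequence[float]) -> list:
    """Scale a vector to unit length so dot products become cosine similarities."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def zero_shot_classify(image_emb: Sequence[float],
                       label_embs: Dict[str, Sequence[float]],
                       temperature: float = 0.01) -> Dict[str, float]:
    """Softmax over cosine similarities between one image and each label's prompt embedding."""
    img = normalize(image_emb)
    labels = list(label_embs)
    logits = [sum(a * b for a, b in zip(img, normalize(label_embs[l]))) / temperature
              for l in labels]
    m = max(logits)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return {l: e / total for l, e in zip(labels, exps)}
```

Swapping in a new benchmark only means changing the list of label names; nothing is retrained, which is the sense in which the classifier is "zero-shot".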

Because it is trained on a wide variety of images paired with the natural language supervision that is abundantly available on the internet, the network can by design be instructed in natural language to perform a great variety of classification benchmarks without being directly optimized for any benchmark’s performance.
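The "contrastive" part of CLIP's name refers to how it learns from image–text pairs: within a batch, each image should score highest against its own caption and lower against every other caption, and vice versa. Below is a minimal sketch of such a symmetric contrastive (cross-entropy) loss over a batch of embeddings. The temperature of 0.07 and the toy vectors are assumptions, and the encoders that would produce the embeddings are omitted.

```python
import math
from typing import List, Sequence


def normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


def cross_entropy(logits: Sequence[float], target: int) -> float:
    """-log softmax(logits)[target], computed with a stable log-sum-exp."""
    m = max(logits)
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return lse - logits[target]


def contrastive_loss(image_embs: Sequence[Sequence[float]],
                     text_embs: Sequence[Sequence[float]],
                     temperature: float = 0.07) -> float:
    """Symmetric loss: pair i is the correct match for both image i and text i."""
    imgs = [normalize(v) for v in image_embs]
    txts = [normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j]: scaled cosine similarity of image i and text j
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature
               for j in range(n)] for i in range(n)]
    # image -> text: row i should pick column i
    loss_img = sum(cross_entropy(logits[i], i) for i in range(n)) / n
    # text -> image: column j should pick row j
    loss_txt = sum(cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (loss_img + loss_txt) / 2
```

Matched pairs drive the loss toward zero, while swapped captions make it large, so minimizing it pulls each image toward its own description in the shared embedding space.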

CLIP is able to learn from unfiltered, highly varied, and highly noisy data, and CLIP models are significantly more flexible and general than existing ImageNet models, the researchers say. Their results with CLIP show that task-agnostic pre-training on internet-scale natural language, which has powered a recent breakthrough in NLP, can also be leveraged to improve the performance of deep learning in other fields such as computer vision.