Modules needed: Keras, Tensorflow, Pandas, Scikit-Learn & Numpy

We are going to build a multi-layer LSTM recurrent neural network to predict the last value of a sequence of values, i.e., the TESLA stock price in this example.

Let’s load the data and inspect them:

import math

import matplotlib.pyplot as plt

import keras

import pandas as pd

import numpy as np

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import LSTM

from keras.layers import Dropout

from keras.layers import *

from sklearn.preprocessing import MinMaxScaler

from sklearn.metrics import mean_squared_error

from sklearn.metrics import mean_absolute_error

from sklearn.model_selection import train_test_split

from keras.callbacks import EarlyStoppingdf=pd.read_csv("TSLA.csv")

print(‘Number of rows and columns:’, df.shape)

df.head(5)

The next step is to split the data into training and test sets to avoid overfitting and to be able to investigate the generalization ability of our model. To learn more about overfitting, read this article:

The target value to be predicted is going to be the “Close” stock price value.

training_set = df.iloc[:800, 1:2].values

test_set = df.iloc[800:, 1:2].values

It’s a good idea to normalize the data before model fitting. This will boost performance. You can read more here for the Min-Max Scaler:

Let’s build the input features with a time lag of 1 day (lag 1):

# Feature Scaling

sc = MinMaxScaler(feature_range = (0, 1))

training_set_scaled = sc.fit_transform(training_set)# Creating a data structure with 60 time-steps and 1 output

X_train = []

y_train = []

for i in range(60, 800):

X_train.append(training_set_scaled[i-60:i, 0])

y_train.append(training_set_scaled[i, 0])

X_train, y_train = np.array(X_train), np.array(y_train)X_train = np.reshape(X_train, (X_train.shape[0], X_train.shape[1], 1))

#(740, 60, 1)

We have now reshaped the data into the following format (#values, #time-steps, #1-dimensional output).

Now, it’s time to build the model. We will build the LSTM with 50 neurons and 4 hidden layers. Finally, we will assign 1 neuron in the output layer for predicting the normalized stock price. We will use the MSE loss function and the Adam stochastic gradient descent optimizer.

Note: the following will take some time (~5min).

model = Sequential()#Adding the first LSTM layer and some Dropout regularisation

model.add(LSTM(units = 50, return_sequences = True, input_shape = (X_train.shape[1], 1)))

model.add(Dropout(0.2))# Adding a second LSTM layer and some Dropout regularisation

model.add(LSTM(units = 50, return_sequences = True))

model.add(Dropout(0.2))# Adding a third LSTM layer and some Dropout regularisation

model.add(LSTM(units = 50, return_sequences = True))

model.add(Dropout(0.2))# Adding a fourth LSTM layer and some Dropout regularisation

model.add(LSTM(units = 50))

model.add(Dropout(0.2))# Adding the output layer

model.add(Dense(units = 1))# Compiling the RNN

model.compile(optimizer = 'adam', loss = 'mean_squared_error')# Fitting the RNN to the Training set

model.fit(X_train, y_train, epochs = 100, batch_size = 32)

When the fitting is finished, you should see something like this:

Prepare the test data (reshape them):

# Getting the predicted stock price of 2017

dataset_train = df.iloc[:800, 1:2]

dataset_test = df.iloc[800:, 1:2]dataset_total = pd.concat((dataset_train, dataset_test), axis = 0)inputs = dataset_total[len(dataset_total) - len(dataset_test) - 60:].valuesinputs = inputs.reshape(-1,1)

inputs = sc.transform(inputs)

X_test = []

for i in range(60, 519):

X_test.append(inputs[i-60:i, 0])

X_test = np.array(X_test)

X_test = np.reshape(X_test, (X_test.shape[0], X_test.shape[1], 1))print(X_test.shape)

# (459, 60, 1)

Make Predictions using the test set.

predicted_stock_price = model.predict(X_test)

predicted_stock_price = sc.inverse_transform(predicted_stock_price)

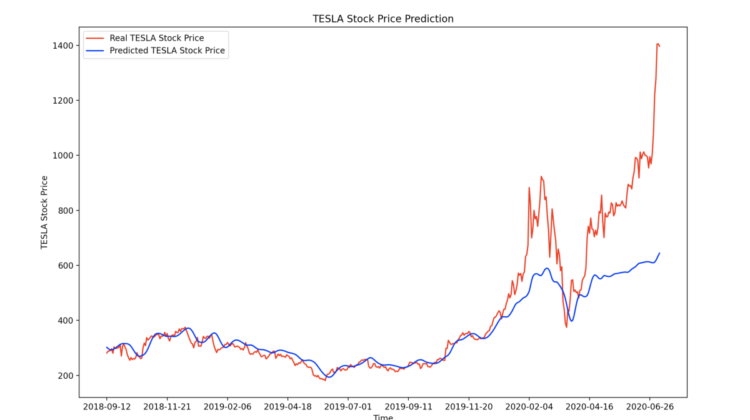

Let’s visualize the results now:

# Visualising the results

plt.plot(df.loc[800:, ‘Date’],dataset_test.values, color = ‘red’, label = ‘Real TESLA Stock Price’)

plt.plot(df.loc[800:, ‘Date’],predicted_stock_price, color = ‘blue’, label = ‘Predicted TESLA Stock Price’)

plt.xticks(np.arange(0,459,50))

plt.title('TESLA Stock Price Prediction')

plt.xlabel('Time')

plt.ylabel('TESLA Stock Price')

plt.legend()

plt.show()

Using a lag of 1 (i.e., the step of one day):