Abstract — What did I do in a nutshell?

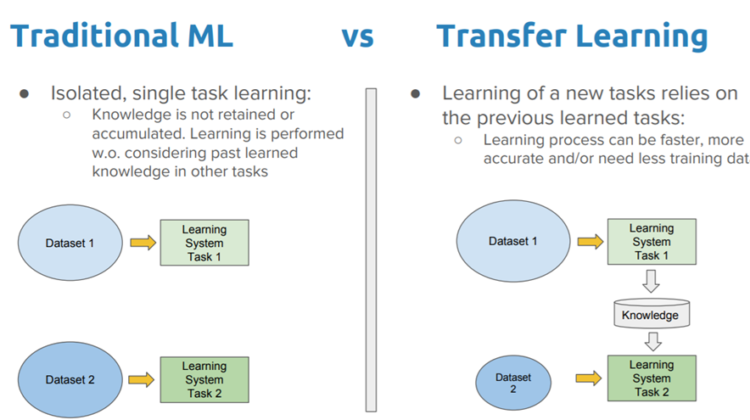

I call it Inheritrain: an inherited training that we can manipulate further to work with our additional features. In this document I explain how I developed a neural network model that inherits characteristics from another, already trained model.

"This practice consists of manipulating already built models, which serve as a basis, to build your own neural network."

Introduction — What is the problem?

I want to create a model based on another, already trained model, and reuse it with small modifications such as freezing or deleting layers. Because the pre-trained layers do not have to be learned from scratch, this practice saves a lot of computation time.

I am going to train this model to classify the CIFAR-10 dataset.

How did I solve the problem? Materials and Methods

To tackle this problem you need a clear grasp of the underlying statistical concepts and of how to use Keras. If you don't have that yet, it is worth reviewing those topics first. I also had to review concepts of the Cartesian plane.

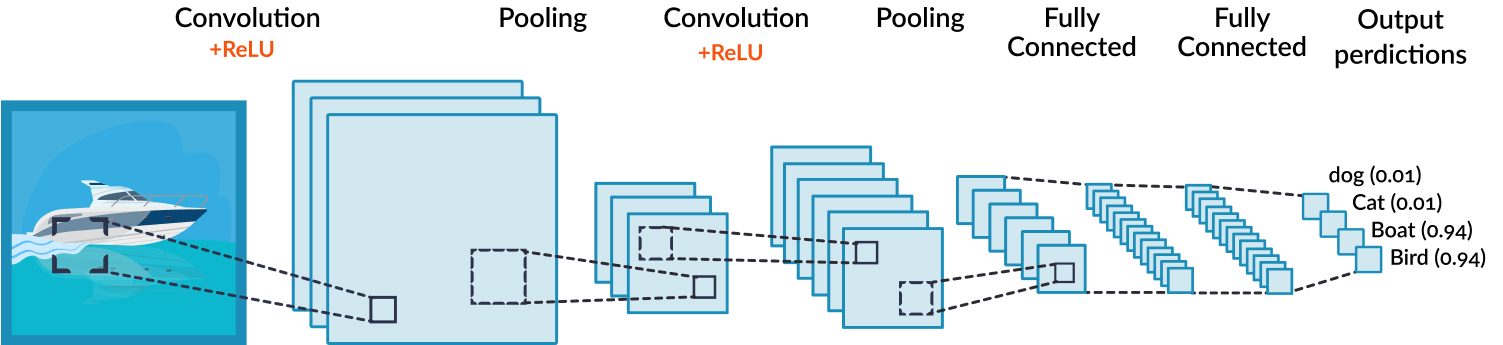

You also need to understand how Python and NumPy represent a 32×32×3 array and, of course, what layers, convolutions, kernels, activation functions, etc. mean.
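Here is a quick illustration of that representation. NumPy stores one CIFAR-10-style image as an array of shape (32, 32, 3), meaning height × width × RGB channels, with uint8 values from 0 to 255. A batch of images adds a leading axis. The random image below is a stand-in, not real CIFAR-10 data.

```python
import numpy as np

# A stand-in image made of random pixels, NOT real CIFAR-10 data
rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(32, 32, 3), dtype=np.uint8)

print(image.shape)  # (32, 32, 3): rows (height), columns (width), channels (R, G, B)
print(image[0, 0])  # the 3 channel values of the top-left pixel

# A 32x32 matrix holding only the red intensities
red_channel = image[:, :, 0]

# A batch of images adds a leading axis: (batch, height, width, channels)
batch = np.stack([image, image])
print(batch.shape)  # (2, 32, 32, 3)
```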

The CIFAR-10 dataset consists of 60000 32×32 colour images in 10 classes, with 6000 images per class. There are 50000 training images and 10000 test images.

The dataset is divided into five training batches and one test batch, each with 10000 images. The test batch contains exactly 1000 randomly-selected images from each class. The training batches contain the remaining images in random order, but some training batches may contain more images from one class than another. Between them, the training batches contain exactly 5000 images from each class.
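Once the labels are loaded, the per-class counts quoted above are easy to check. Each label is an integer from 0 to 9, and np.bincount counts how often each value appears. The sketch below runs on a synthetic label array built to match the description (1000 labels per class, shuffled), not on the real test set. On the real data you would pass Y_test from load_data() instead. The helper name class_counts is hypothetical.

```python
import numpy as np

def class_counts(labels, num_classes=10):
    """Hypothetical helper: count how many labels fall in each class 0..num_classes-1."""
    # load_data() returns labels with shape (n, 1), so flatten them first
    return np.bincount(np.asarray(labels).ravel(), minlength=num_classes)

# Synthetic stand-in for the test labels: 1000 of each class, shuffled
rng = np.random.default_rng(0)
fake_test_labels = rng.permutation(np.repeat(np.arange(10), 1000)).reshape(-1, 1)

counts = class_counts(fake_test_labels)
print(counts)  # every entry is 1000 for this synthetic array
```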

https://www.cs.toronto.edu/~kriz/cifar.html

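```python
import tensorflow as tf
from tensorflow import keras as K

# Load CIFAR-10 data
(X_train, Y_train), (X_test, Y_test) = K.datasets.cifar10.load_data()

# Keras Applications expect a model-specific kind of input preprocessing
X_train = K.applications.inception_resnet_v2.preprocess_input(X_train)
X_test = K.applications.inception_resnet_v2.preprocess_input(X_test)

# One-hot encode the labels 0 to 9 (the 10 CIFAR-10 categories)
Y_train = K.utils.to_categorical(Y_train, 10)
Y_test = K.utils.to_categorical(Y_test, 10)
```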
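The NumPy sketch below shows what those two calls do to the data. As far as I know, inception_resnet_v2.preprocess_input uses the "tf" preprocessing mode, which maps pixel values from [0, 255] to [-1, 1]. to_categorical turns each integer label into a one-hot row. The helper names here are hypothetical and only for illustration. Use the Keras functions in the real pipeline.

```python
import numpy as np

def scale_like_tf_mode(x):
    """Hypothetical helper: map uint8 pixels in [0, 255] to floats in [-1, 1]."""
    return x.astype(np.float32) / 127.5 - 1.0

def one_hot(labels, num_classes=10):
    """Hypothetical helper: integer labels of shape (n, 1) -> one-hot rows (n, num_classes)."""
    return np.eye(num_classes, dtype=np.float32)[np.asarray(labels).ravel()]

pixels = np.array([0, 127, 255], dtype=np.uint8)
print(scale_like_tf_mode(pixels))  # approximately [-1.0, -0.004, 1.0]

labels = np.array([[3], [0]])  # same (n, 1) shape that load_data() returns
print(one_hot(labels))  # row 0 has its 1 in column 3, row 1 in column 0
```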

Available Applications

Keras Applications are deep learning models that are made available alongside pre-trained weights. These models can be used for prediction, feature extraction, and fine-tuning.

https://keras.io/api/applications/

I selected InceptionResNetV2 because it is one of the available models with the highest accuracy on ImageNet.

InceptionResNetV2 function

```python
tf.keras.applications.InceptionResNetV2(
    include_top=True,
    weights="imagenet",
    input_tensor=None,
    input_shape=None,
    pooling=None,
    classes=1000,
    classifier_activation="softmax",
    **kwargs
)
```
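The input size matters here. According to the Keras documentation, when include_top=False the input width and height must be at least 75 pixels. That means 32×32 CIFAR-10 images cannot go into InceptionResNetV2 directly and have to be upscaled, here to 299×299, the model's default input size. In the transfer learning code, a Lambda layer does this with tf.image.resize, whose default method is bilinear. The NumPy sketch below swaps in simpler nearest-neighbour upscaling just to show the shape change and how much larger each image becomes. upscale_nearest is a hypothetical helper.

```python
import numpy as np

def upscale_nearest(image, size):
    """Hypothetical helper: nearest-neighbour upscaling of an (h, w, c) image to (size, size, c).

    A simplified stand-in for tf.image.resize, which defaults to bilinear.
    """
    h, w = image.shape[:2]
    rows = np.arange(size) * h // size  # source row for each output row
    cols = np.arange(size) * w // size  # source column for each output column
    return image[rows[:, None], cols[None, :]]

image = np.arange(32 * 32 * 3, dtype=np.float32).reshape(32, 32, 3)
big = upscale_nearest(image, 299)
print(big.shape)  # (299, 299, 3)
print((299 * 299) / (32 * 32))  # about 87.3 times more pixels per image
```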

```python
# Transfer learning start: ImageNet weights, without the 1000-class classifier on top
base_model = K.applications.InceptionResNetV2(include_top=False, weights="imagenet", input_shape=(299, 299, 3))

# Inputs start at the CIFAR-10 size of 32×32
inputs = K.Input(shape=(32, 32, 3))

# Lambda layer that scales the images up to the size the base model expects
resized = K.layers.Lambda(lambda image: tf.image.resize(image, (299, 299)))(inputs)

# Base model layers; training=False keeps its BatchNormalization layers in inference mode
x = base_model(resized, training=False)
x = K.layers.GlobalAveragePooling2D()(x)
x = K.layers.Dense(500, activation="relu")(x)
x = K.layers.Dropout(0.3)(x)  # Reduce overfitting
outputs = K.layers.Dense(10, activation="softmax")(x)

# Mount the model
model = K.Model(inputs, outputs)

base_model.trainable = False  # Freeze all base model weights

optimizer = K.optimizers.Adam()
```

Adam is a stochastic gradient descent method based on adaptive estimation of first-order and second-order moments.
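The sketch below writes out the standard Adam update rule in NumPy to make "adaptive estimation of first-order and second-order moments" concrete. The defaults (learning rate 0.001, beta_1 0.9, beta_2 0.999, epsilon 1e-7) are what I understand K.optimizers.Adam() uses. Treat this as an illustration rather than Keras's exact implementation, which may differ in small details such as where epsilon enters. The function name adam_step and the toy objective are hypothetical.

```python
import numpy as np

def adam_step(param, grad, m, v, t, lr=0.001, beta_1=0.9, beta_2=0.999, eps=1e-7):
    """Hypothetical helper: one Adam update; t is the 1-based step count."""
    m = beta_1 * m + (1 - beta_1) * grad  # first moment: running mean of gradients
    v = beta_2 * v + (1 - beta_2) * grad ** 2  # second moment: running mean of squared gradients
    m_hat = m / (1 - beta_1 ** t)  # bias correction, since m and v start at zero
    v_hat = v / (1 - beta_2 ** t)
    param = param - lr * m_hat / (np.sqrt(v_hat) + eps)
    return param, m, v

# Toy problem: minimise f(w) = (w - 3)^2, whose gradient is 2 * (w - 3)
w, m, v = 0.0, 0.0, 0.0
for t in range(1, 5001):
    w, m, v = adam_step(w, 2 * (w - 3), m, v, t, lr=0.01)
print(w)  # close to 3.0
```

At the first step the bias-corrected ratio m_hat / sqrt(v_hat) equals the sign of the gradient, so each parameter moves by roughly the learning rate no matter how large the gradient is. That per-parameter scaling is the "adaptive" part.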

```python
# Compile the model
model.compile(loss="categorical_crossentropy", optimizer=optimizer, metrics=["acc"])  # Accuracy

history = model.fit(X_train, Y_train, validation_data=(X_test, Y_test), batch_size=300, epochs=4, verbose=1)

# Save the model
model.save("cifar10.h5")
```
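Plain NumPy can trace the classifier head on top of the frozen base, which shows how the shapes flow at inference time. This sketch assumes the base model outputs an 8×8×1536 feature map for 299×299 inputs, which is my understanding of InceptionResNetV2's final block. Random weights stand in for trained ones, and biases are left out for brevity. Dropout is skipped because Keras only applies it during training.

```python
import numpy as np

rng = np.random.default_rng(0)

# Assumed base model output for a batch of 2 images: (batch, 8, 8, 1536)
features = rng.standard_normal((2, 8, 8, 1536)).astype(np.float32)

# GlobalAveragePooling2D: average over the two spatial axes -> (batch, 1536)
pooled = features.mean(axis=(1, 2))

# Dense(500, activation="relu") with random stand-in weights, no bias -> (batch, 500)
w1 = rng.standard_normal((1536, 500)).astype(np.float32) * 0.01
hidden = np.maximum(pooled @ w1, 0.0)

# Dropout(0.3) does nothing at inference time, so it is skipped here

# Dense(10, activation="softmax"), no bias -> one probability per CIFAR-10 class
w2 = rng.standard_normal((500, 10)).astype(np.float32) * 0.01
logits = hidden @ w2
logits -= logits.max(axis=1, keepdims=True)  # subtract the max for numerical stability
probs = np.exp(logits) / np.exp(logits).sum(axis=1, keepdims=True)

print(pooled.shape, hidden.shape, probs.shape)  # (2, 1536) (2, 500) (2, 10)
```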

What did I find out? Results

I usually search both YouTube videos and written material, and I try to go through both, since the written sources usually contain much more information.

You need to be clear about the characteristics and use of neural networks in Keras. If you are not, review them right now.

What does it mean? Discussion

Reusing previously trained models is very useful: it lets us build new models with high accuracy from relatively little data.

Freeze the required layers

In Keras, each layer has a parameter called “trainable”. To freeze the weights of a particular layer, set this parameter to False, which tells Keras not to update that layer during training. That’s it! We go over each layer and choose which ones we want to train.
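Here is a minimal sketch of the trainable flag on a toy model. The layer names and sizes are made up for illustration. Freezing one layer moves its kernel and bias from trainable_weights to non_trainable_weights. As with the real model, the change only affects training after the model is compiled again.

```python
from tensorflow import keras as K

# A toy model standing in for a real network; names and sizes are illustrative
model = K.Sequential([
    K.Input(shape=(4,)),
    K.layers.Dense(8, activation="relu", name="hidden"),
    K.layers.Dense(3, activation="softmax", name="head"),
])

# Freeze one layer by setting its trainable attribute to False
model.get_layer("hidden").trainable = False

for layer in model.layers:
    print(layer.name, layer.trainable)

# Only the head's kernel and bias are still updated by the optimizer
print(len(model.trainable_weights), len(model.non_trainable_weights))  # 2 2
```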

```python
from tensorflow.keras.utils import plot_model

# Requires the pydot package and Graphviz to be installed
plot_model(base_model, to_file="model.png")
```

The resulting image, model.png, shows a large graph of connected nodes, which represents the layers of the neural network.

```python
# Print the index and name of every layer in the base model
for i, layer in enumerate(base_model.layers):
    print(i, layer.name)
```

```
…
724 activation_390
725 activation_393
726 block8_7_mixed
727 block8_77_conv
…
```

This makes it easier to see, in a structured way, which layers of the neural network we are going to manipulate or freeze to improve accuracy.
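The freeze cut-off can also be chosen by layer name from that printed list, instead of by a hard-coded index. The helper below is a hypothetical sketch. It works on any sequence of objects with name and trainable attributes, such as base_model.layers. Here it runs on simple stand-in objects, so it does not need the model to be downloaded. The names come from the printed excerpt.

```python
from types import SimpleNamespace

def freeze_before(layers, first_trainable_name):
    """Hypothetical helper: freeze every layer before the named one and unfreeze the rest.

    Returns the index of the first trainable layer.
    """
    names = [layer.name for layer in layers]
    cutoff = names.index(first_trainable_name)  # raises ValueError if the name is missing
    for i, layer in enumerate(layers):
        layer.trainable = i >= cutoff
    return cutoff

# Stand-ins for Keras layers: only .name and .trainable are needed
layers = [SimpleNamespace(name=n, trainable=True)
          for n in ["activation_390", "activation_393", "block8_7_mixed"]]

cutoff = freeze_before(layers, "block8_7_mixed")
print(cutoff, [layer.trainable for layer in layers])  # 2 [False, False, True]
```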

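```python
# Example: freeze the first 402 layers (indices 0 to 401) and train the rest.
# base_model.trainable was set to False above, and a nested model with
# trainable=False exposes no trainable weights, so unfreeze it as a whole first.
base_model.trainable = True

for layer in base_model.layers[:402]:
    layer.trainable = False

for layer in base_model.layers[402:]:
    layer.trainable = True

# Changes to trainable only take effect after compiling again.
# A low learning rate helps avoid destroying the pre-trained weights.
optimizer = K.optimizers.Adam(1e-5)

model.compile(loss="categorical_crossentropy", optimizer=optimizer, metrics=["acc"])

history = model.fit(X_train, Y_train, validation_data=(X_test, Y_test), batch_size=300, epochs=4, verbose=1)
```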

After freezing layers up to a few arbitrarily chosen points, I saw overfitting and reduced accuracy. It is necessary to remove layers and check whether the accuracy improves.
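A simple way to spot that overfitting is to compare training and validation accuracy in the History object returned by model.fit. With metrics=["acc"], the tf.keras versions I have used record these under the keys "acc" and "val_acc" in history.history. The key names are parameters here in case your version differs. The numbers below are invented purely to show the computation. They are not results from this experiment. accuracy_gap is a hypothetical helper.

```python
def accuracy_gap(history_dict, train_key="acc", val_key="val_acc"):
    """Hypothetical helper: per-epoch gap between training and validation accuracy."""
    return [round(t - v, 4) for t, v in zip(history_dict[train_key], history_dict[val_key])]

# Invented numbers for illustration only, shaped like history.history
fake_history = {
    "acc": [0.80, 0.88, 0.93, 0.97],
    "val_acc": [0.82, 0.85, 0.84, 0.83],
}

gaps = accuracy_gap(fake_history)
print(gaps)  # a gap that keeps growing while val_acc stalls suggests overfitting
```

On a real run you would call accuracy_gap(history.history).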