In this article, I try to explain the vanishing gradient problem (VGP) in a simple way so that it is easy to follow. Some prior understanding of neural networks will help, since a few terms may be unfamiliar if you have never studied deep learning.

VGP is a very well-researched topic. It was first identified by Sepp Hochreiter in his diploma thesis in 1991 (http://people.idsia.ch/~juergen/fundamentaldeeplearningproblem.html), and many approaches have since been proposed to address it.

In simple words, the vanishing gradient problem arises when training certain neural networks with gradient-based methods (e.g. backpropagation). It makes the parameters of the network hard to train, especially those in the earlier layers, and it gets worse as the number of layers increases.

Let us look at it in a little more detail. As the name suggests, the gradient vanishes: it approaches zero, which means the slope (the first derivative) of the loss with respect to a weight approaches zero. With backpropagation, the error signal, i.e. the gradient of the loss, is propagated backward layer by layer, and it tends to shrink at each successive layer because it is multiplied by that layer's local derivative. By the time it reaches the first few layers, it has diminished so much that the weights there barely change. With such a small gradient, it becomes very hard to adjust and train the weights of the first few layers, and this is VGP.
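To make the shrinking concrete, here is a minimal sketch of backpropagation through a toy network. It is an illustration, not a real training setup, and it assumes a chain of ten layers with a single sigmoid neuron each, every weight set to 1.0 and an input of 1.0. The function name `layer_gradients` is made up for this example.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer_gradients(x, weights):
    """Return |d(output)/d(w_k)| for each layer of a chain of one-neuron sigmoid layers."""
    # Forward pass: remember the input and output of every layer.
    inputs, outputs = [], []
    a = x
    for w in weights:
        inputs.append(a)
        a = sigmoid(w * a)
        outputs.append(a)

    # Backward pass: `upstream` holds d(output)/d(a_k), the signal arriving from later layers.
    grads = [0.0] * len(weights)
    upstream = 1.0
    for k in reversed(range(len(weights))):
        local = outputs[k] * (1.0 - outputs[k])   # sigmoid'(z_k), never larger than 0.25
        grads[k] = abs(upstream * local * inputs[k])
        upstream = upstream * local * weights[k]  # pass the signal one layer further back
    return grads

# Assumed toy setup: 10 layers, all weights 1.0, input 1.0.
grads = layer_gradients(x=1.0, weights=[1.0] * 10)
for k, g in enumerate(grads, start=1):
    print(f"layer {k:2d}: |gradient| = {g:.2e}")
```

In this toy setup the printed gradient for layer 1 is several orders of magnitude smaller than the one for layer 10, because every step backward multiplies the signal by another sigmoid derivative.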

Based on the above definition, we can see that this is not a problem with neural networks as such but with gradient-based learning methods, and it is driven by specific activation functions (e.g. Sigmoid/TanH). An activation function determines how strongly an individual neuron passes its weighted input on to the next layer. Functions such as Sigmoid and TanH are sometimes referred to as squashing functions.

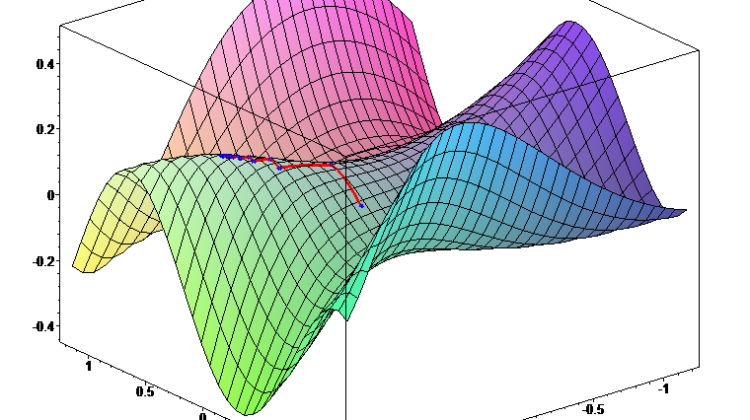

These activation functions (e.g. Sigmoid/TanH) “squash” the real line into a bounded interval, that is, they map inputs to a small output range in a nonlinear way. The sigmoid function, for example, “squashes” inputs to lie between 0 and 1. For inputs whose sigmoid output is close to 0 or 1, the gradient with respect to those inputs is close to zero, and this is what leads to VGP. As a solution, we can use an activation function that does not saturate in this way, so choosing a better activation function can work out well.
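The saturation is easy to check numerically. The sketch below evaluates the sigmoid and TanH derivatives at a few sample inputs, using the standard identities sigmoid'(z) = s(1 - s) and tanh'(z) = 1 - tanh(z)^2. The helper names and the sample points are chosen only for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)   # largest at z = 0, where it equals 0.25

def tanh_grad(z):
    t = math.tanh(z)
    return 1.0 - t * t     # largest at z = 0, where it equals 1.0

# Sample points chosen for illustration: far left, moderate, centre, moderate, far right.
for z in (-10.0, -2.0, 0.0, 2.0, 10.0):
    print(f"z = {z:6.1f}  sigmoid'(z) = {sigmoid_grad(z):.6f}  tanh'(z) = {tanh_grad(z):.6f}")
```

Both derivatives peak at zero and fall off quickly on either side, so a neuron whose input lands far from zero passes almost no gradient backward.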

Rectified Linear Unit (ReLU) is the most popular choice as a solution to VGP. The ReLU function maps x to max(0,x), so it does not squash positive inputs into a small range, though it does map all negative inputs to zero. Learn more about ReLU from this original paper by Vinod Nair and Geoffrey E. Hinton: https://openreview.net/forum?id=rkb15iZdZB
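A rough way to see why this helps is to compare the activation derivatives multiplied along a path through the network. The sketch below is a simplification that ignores the weights entirely and assumes a depth of 20. It compares the best case for sigmoid (every derivative at its maximum of 0.25) with a ReLU path on which every unit is active.

```python
def relu(z):
    return max(0.0, z)

def relu_grad(z):
    # Derivative is 1 for positive inputs and 0 otherwise
    # (the value at exactly 0 is a convention; 0 is used here).
    return 1.0 if z > 0 else 0.0

depth = 20  # assumed number of layers, for illustration only

# Best case for sigmoid: each layer contributes its maximum derivative, 0.25.
sigmoid_best_case = 0.25 ** depth

# ReLU path on which every unit receives a positive input: each factor is exactly 1.
relu_active_path = relu_grad(3.0) ** depth

# A ReLU unit with a negative input contributes a factor of 0 instead.
relu_dead_unit = relu_grad(-3.0)

print(f"sigmoid, best case over {depth} layers: {sigmoid_best_case:.2e}")
print(f"ReLU, all units active over {depth} layers: {relu_active_path}")
print(f"ReLU, single inactive unit: {relu_dead_unit}")
```

The ReLU factor stays at 1 on active units no matter how deep the network is, while the sigmoid product collapses even in its best case. The last line shows the flip side mentioned above: a unit with a negative input passes no gradient at all.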

VGP can have a significant impact on sequential data and recurrent neural networks (RNNs). Because of VGP, an RNN struggles to learn from the early time steps of a sequence, which gives it poor long-term memory. To address this, Sepp Hochreiter, who first identified the problem in 1991, came up with a solution together with Jürgen Schmidhuber: a variation of the RNN known as the long short-term memory (LSTM) network: http://www.bioinf.jku.at/publications/older/2604.pdf

So there are a number of approaches to address the vanishing gradient problem.

In summary, VGP is one of the problems associated with training neural networks: the neurons in the early layers are not able to learn because the gradients that train their weights shrink toward zero. This happens because of the greater depth of the network combined with activation functions whose derivatives are small. The solution is to modify how the activation functions or weight matrices act. Techniques to do so include alternative activation functions (e.g. ReLU), LSTM, GRU, alternative weight matrices, multi-level hierarchies, etc.