Haven’t watched Rashomon yet? Beware! Spoilers ahead!

This blog post is the joint work of Piotr Piątyszek and Jakub Wiśniewski.

We regularly refer to the Rashomon Effect in blogs and articles. What this psychological term represents is the backbone of our research. To show why, we are going to dive deep into the origin of the Rashomon Effect.

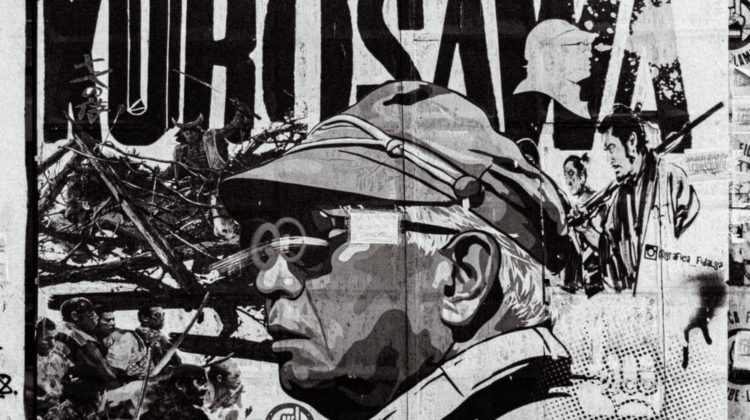

It all started with one movie directed by the then little-known Akira Kurosawa. Rashomon was the masterpiece that brought him worldwide recognition. First of all, it is a thrilling crime story about a murdered samurai. We watch in suspense as witnesses in court tell their stories. Each new testimony brings new contradictions.

The perspective of this scene is what makes the movie so captivating. Kurosawa puts viewers in front of the witnesses. You are the judge!

You listen to four people: the lumberjack who found the body, a bandit, the murdered samurai (through a medium), and his wife. The prime suspect is the infamous Tajōmaru, an outlaw with a long track record. After some analysis, you can see that everyone involved is hiding something embarrassing to themselves. The stories are contradictory. Each viewpoint, although concerning the same situation, is totally different, skewed by the observer’s perspective or even intentionally distorted. The reader might ask:

Okay, it is obvious that perspective matters, but how does it affect AI?

There are some useful lessons that can be transferred from the psychological Rashomon Effect to the world of AI.

Lesson 1

People who create AI models, or work on any other project, often do not want to see their own faults. They either intentionally hide flaws or unconsciously deny their existence. In science, we use the mechanism of peer review to deal with that. A good idea is to have auditors who are not involved in the project.

The trial ends, but there is no judgment. Viewers do not know who is guilty. But wait, as a viewer, you are the judge, so you need to rule on who is guilty. But you can’t be sure; the stories are so different.

Lesson 2

Even if you use many explainable AI algorithms to examine a model’s behavior, you cannot be sure how it works. Using white-box models is the only way to be sure that your project is free of flaws.

But the director brings a plot twist. The lumberjack saw everything, but in front of the judge he kept silent. He was afraid of getting into trouble. Yet as someone not involved in the crime, the lumberjack could be the ideal auditor in our metaphor.

Lesson 3

The culture of your organization must be conducive to admitting mistakes and bringing them to light. Otherwise, every defect will remain hidden. In the context of machine learning, a model’s creator can even use adversarial attacks to hide unfairness from audits.

Kurosawa shows us that everyone is a hero in their own story. The samurai said that he had committed suicide. The bandit claimed that he killed the samurai after a long and spectacular fight. From the lumberjack’s point of view, the fight was clumsy, and neither of them even wanted to fight, but the samurai’s wife demanded the duel.

Lesson 4

There is always some perspective, even in AI models. Why? The answer lies in data. Something can go wrong at every step of the way. From experiment design through data gathering and processing, bias can creep into the data. When we then fit a model to this skewed data, the output will also be skewed. It won’t reflect the real state of the world but a projection of it.
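A minimal sketch of this effect, under loudly stated assumptions: the districts, the repayment outcomes, and the toy "model" (a plain observed rate) are all hypothetical and invented for illustration. It only shows how a skewed data-gathering step carries over into what is learned.

```python
# Hypothetical "world": (district, outcome) pairs, where outcome 1 = loan repaid.
# All numbers are made up for illustration.
world = (
    [("north", 1)] * 80 + [("north", 0)] * 20
    + [("south", 1)] * 50 + [("south", 0)] * 50
)


def fit_rate(rows):
    """A toy 'model': the repayment rate observed in the given rows."""
    return sum(outcome for _, outcome in rows) / len(rows)


# Biased gathering step: data was collected only in the north district.
gathered = [row for row in world if row[0] == "north"]

true_rate = fit_rate(world)        # what the whole world looks like
learned_rate = fit_rate(gathered)  # what the model sees through skewed data

print(f"true rate: {true_rate:.2f}, learned rate: {learned_rate:.2f}")
```

The model is not wrong about the data it was given; it simply describes the north district and presents it as the whole town.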

Finally, let us take up a more specific subject. The trial should be fair, but fairness means something different from each perspective.

Fairness of a model

Fairness has been referenced on this blog a couple of times, so we will assume that its core concepts are known to the reader. Let’s imagine that we want to hire candidates for job openings in a company that wants to be fair. Our town has two populations of equal size, and the HR department wants to reflect that in the future team. Part of the recruitment process is solving a set of tasks, after which each candidate ends up with a score.

We want to hire only the best candidates. But there is a problem: the first population has better scores than the second one. What should one do to make a fair hiring decision? Take the best candidates overall, most of whom come from the first population, or take the top candidates from each population to reflect the state of the world?

- From one perspective, we cannot rule out that past socio-economic factors caused the difference. In that case, some could argue that it would be fair to break this cycle and give the jobs to the top scorers in each population.

- On the other hand, from the perspective of someone from the first population who would otherwise have been given a job, it would be vastly unfair. Why would someone else get the job when this person scored better than him/her?
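The two hiring strategies above can be sketched in a few lines. This is only an illustration under assumptions: the populations "A" and "B", the score ranges, and the number of openings are hypothetical, and the function names are ours, not part of any library.

```python
# Hypothetical candidates: population A scores 50..89, population B scores 40..79.
candidates = (
    [{"group": "A", "score": 50 + i} for i in range(40)]
    + [{"group": "B", "score": 40 + i} for i in range(40)]
)


def hire_top_overall(pool, k):
    """Strategy 1: take the k best scores, ignoring population."""
    return sorted(pool, key=lambda c: c["score"], reverse=True)[:k]


def hire_top_per_group(pool, k):
    """Strategy 2: split the k openings equally and take the best from each population."""
    groups = sorted({c["group"] for c in pool})
    per_group = k // len(groups)
    hired = []
    for g in groups:
        members = [c for c in pool if c["group"] == g]
        hired += sorted(members, key=lambda c: c["score"], reverse=True)[:per_group]
    return hired


def share_of_group(hired, group):
    """Fraction of the hired candidates who belong to the given population."""
    return sum(c["group"] == group for c in hired) / len(hired)


def passed_over(pool, hired):
    """Rejected candidates who scored higher than the lowest-scoring hire."""
    lowest = min(c["score"] for c in hired)
    return [c for c in pool if c not in hired and c["score"] > lowest]


overall = hire_top_overall(candidates, 20)
per_group = hire_top_per_group(candidates, 20)

print("share of A, top overall:  ", share_of_group(overall, "A"))
print("share of A, top per group:", share_of_group(per_group, "A"))
print("passed over, per group:   ", len(passed_over(candidates, per_group)))
```

The first strategy produces a team dominated by one population, while the second produces a balanced team at the cost of rejecting some candidates who outscored people that were hired. Neither number tells you which outcome is fair; that depends on the perspective you take.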

These questions of fairness are rather philosophical, but answering them is crucial for building non-discriminatory and responsible models that are beneficial to society.

Who would have thought that we can learn this much about AI from a 70-year-old Japanese movie?

If you are interested in other posts about explainable, fair and responsible ML, follow #ResponsibleML on Medium.